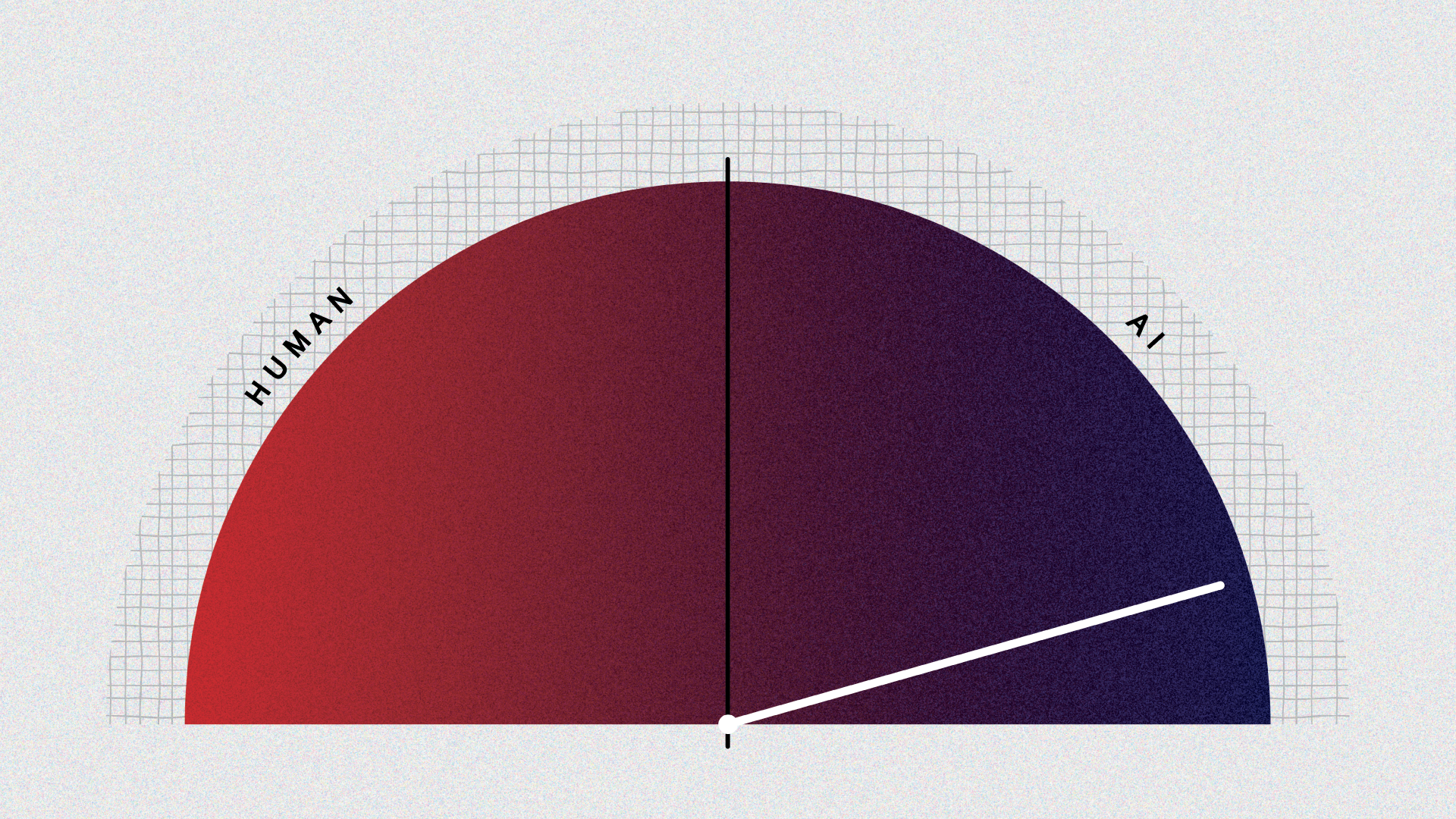

Artificial intelligence is coming for us

Spoiler alert: it’s exciting (and somewhat terrifying).

Gentiana Paçarizi

Gentiana Paçarizi is managing editor at K2.0. She has completed a master’s degree in Journalism and Public Relations at the University of Prishtina ‘Hasan Prishtina’.

This story was originally written in English.